The RPD file, or repository, is the heart and soul of an OBIEE instance. As discussed in an earlier post, Oracle BI has two kinds of metadata: one that lives in database tables and one that lives in files. The RPD is one of the two file-based metadata stores; the other is the presentation catalog.

What does the OBIEE RPD contain?

The OBIEE RPD stores all the metadata that describes a data warehouse or other data source. It holds the definitions of the tables used to populate graphs and charts, the physical joins between those tables, and the star schema (BMM) design that tells the BI Server how to query the data warehouse or database.

The Repository is mainly composed of three layers:

1. Physical Layer

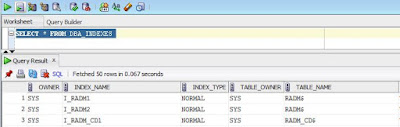

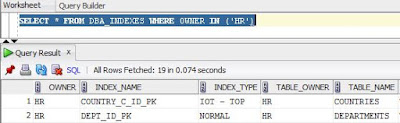

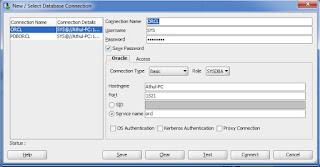

This is where you import all your data warehouse tables. You create the database connection here, along with the credentials used to connect to the warehouse schema. You can also set options such as connection pooling, timeouts and various other database parameters.

Here you create alias tables for the imported tables and define the joins between them. A physical join only states which columns relate two tables; whether a join behaves as an inner or outer join is set on the logical joins in the BMM layer, where inner is the default. In short, anything that relates to the database resides here.

2. Business Model and Mapping (BMM) Layer

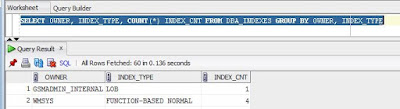

The BMM layer, or Business Model and Mapping layer, is where you define the relationships between the tables you imported into the Physical Layer. Here you build the business model diagram, create hierarchies for your dimensions, define logical (complex) joins between the logical tables, and set aggregation rules for measures. You can also define complex calculations on facts here; however, it is recommended to push complex calculations down to the database or into the data load to the data warehouse.

This is arguably the most important layer in the RPD, because this is where each business process is defined in a way the BI Server understands. A properly designed BMM model is crucial for a clean BI Analytics implementation.

3. Presentation Layer

The presentation layer is what the end user or BI Author sees after logging in to Analytics. The BI Author, or report developer, pulls data from this layer through the web UI. Dimensions and facts should be organised well in this layer so that anyone developing a report can easily pull in columns and build it.
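To make the relationship between the three layers concrete, here is a minimal Python sketch that models one column as it passes through each layer. This is only an illustration of the idea, not the real RPD file format. Every name in it (`W_SALES_F`, `REVENUE_AMT`, the "Sales" subject area and the class names) is made up for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PhysicalColumn:
    """Physical Layer: a column of an imported table or alias."""
    table: str
    column: str


@dataclass(frozen=True)
class LogicalColumn:
    """BMM Layer: a business name mapped to a physical source."""
    name: str
    source: PhysicalColumn
    aggregation: Optional[str] = None  # e.g. "SUM" for a measure, None for an attribute


@dataclass(frozen=True)
class PresentationColumn:
    """Presentation Layer: what the report developer sees in the web UI."""
    subject_area: str
    folder: str
    name: str
    logical: LogicalColumn


def lineage(col: PresentationColumn) -> str:
    """Trace a presentation column back to its physical column."""
    phys = col.logical.source
    return (f'"{col.subject_area}"."{col.folder}"."{col.name}"'
            f" -> {col.logical.name} -> {phys.table}.{phys.column}")


# Hypothetical example: a Revenue measure exposed in a "Sales" subject area.
revenue = PresentationColumn(
    subject_area="Sales",
    folder="Facts",
    name="Revenue",
    logical=LogicalColumn("Revenue", PhysicalColumn("W_SALES_F", "REVENUE_AMT"), "SUM"),
)
print(lineage(revenue))
```

The point of the sketch is that a report developer only ever works with the presentation name, while the repository keeps the chain back to the physical table.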

RPD Location - You can find the RPD file under the Middleware Home folder on your OBIEE server. The exact location is:

MW_HOME/instances/instance1/bifoundation/OracleBIServerComponent/coreapplication_obis1/repository/

This folder holds the repositories that have been deployed over time. To find the name of the RPD currently in use, go to Enterprise Manager > coreapplication > Deployment tab.
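If you want a quick look at what has accumulated in that folder, a small script along these lines can list the RPD files, newest first. It is a minimal sketch: the function name `list_repositories` is my own, and you pass in the repository folder of your own installation. Keep in mind that the most recently modified file is not necessarily the one in use, so Enterprise Manager remains the place to confirm the active RPD.

```python
from pathlib import Path


def list_repositories(repo_dir):
    """Return the .rpd files in repo_dir, newest first by modification time."""
    files = [
        p for p in Path(repo_dir).iterdir()
        if p.is_file() and p.suffix.lower() == ".rpd"
    ]
    return sorted(files, key=lambda p: p.stat().st_mtime, reverse=True)
```

For example, `for rpd in list_repositories(repo_dir): print(rpd.name)` prints one file name per line, where `repo_dir` is the repository folder on your server.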

Which component of OBIEE manages the RPD file?

It is the OracleBIServer component, one of the Oracle BI 11g components, that reads and manages the repository file. Every time a request comes in from the web UI (Presentation Services), the BI Server refers to the metadata in the repository file to convert that request into a physical SQL query. The data returned by the SQL query is sent back to Analytics for use in reports.
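The translation step can be pictured with a toy example. The sketch below is not how the BI Server is implemented, since the real server does far more work. It only shows the idea that metadata is enough to turn a list of requested columns into physical SQL. The column names, table names and join are all hypothetical.

```python
# Hypothetical metadata: presentation column -> (physical expression, aggregation rule)
COLUMNS = {
    '"Sales"."Products"."Brand"': ("P.BRAND", None),
    '"Sales"."Facts"."Revenue"': ("F.REVENUE_AMT", "SUM"),
}

# Hypothetical physical join between the fact table and the product dimension.
FROM_CLAUSE = "W_SALES_F F JOIN W_PRODUCT_D P ON F.PRODUCT_KEY = P.PRODUCT_KEY"


def to_physical_sql(requested):
    """Build a physical SQL string for the requested presentation columns."""
    select_items, group_by = [], []
    for name in requested:
        expr, agg = COLUMNS[name]
        if agg:
            # Measures get the aggregation rule defined in the metadata.
            select_items.append(f"{agg}({expr})")
        else:
            # Attributes are selected as-is and become grouping columns.
            select_items.append(expr)
            group_by.append(expr)
    sql = f"SELECT {', '.join(select_items)} FROM {FROM_CLAUSE}"
    if group_by:
        sql += f" GROUP BY {', '.join(group_by)}"
    return sql


print(to_physical_sql(['"Sales"."Products"."Brand"', '"Sales"."Facts"."Revenue"']))
```

Notice that the report developer never writes the join or the `SUM`; both come from what was defined in the repository.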